What is Responsible AI?

Movies that depict AI as a world-dominating force, like The Terminator, can create an exaggerated view of the technology. Given the buzz around AI and its complexity, it’s natural to be cautious, especially if you’ve watched any of these fear-mongering movies. Although you don’t have to worry about AI taking over the world, there are valid concerns about the societal consequences of this technology. That is why the concept of responsible AI was created: to ensure that organizations develop technology in an ethical and safe manner.

So, what is responsible AI?

Responsible AI is an approach to developing and deploying AI systems in a secure, ethical, and transparent manner. This precaution is necessary because AI systems are the result of decisions made by developers and other individuals, whose personal biases can end up reflected in the systems. A biased AI system can affect many lives, for example by unfairly declining loan applications or inaccurately diagnosing a patient. Having set principles can therefore guide those who have a hand in creating AI towards more equitable decisions.

Benefits of responsible AI

There are four key benefits to implementing this type of governance:

- Trust and adoption: Accenture’s 2022 Tech Vision research found that only 35% of global consumers trust how AI is being implemented by organizations. As organizations start to scale AI, they need to show their commitment to responsible AI to win over consumer trust.

- Risk reduction: Because algorithms can be biased or trained on data that does not represent the population, responsible AI practices can mitigate the harm caused by unjust AI conclusions.

- Privacy protection: Responsible AI addresses privacy concerns and helps ensure that organizations comply with data protection laws.

- Culture of feedback: Instilling the principles of responsible AI in your organization creates an open environment where employees feel empowered to raise concerns about the ethicality of the AI systems they’re working on.

Responsible AI in action

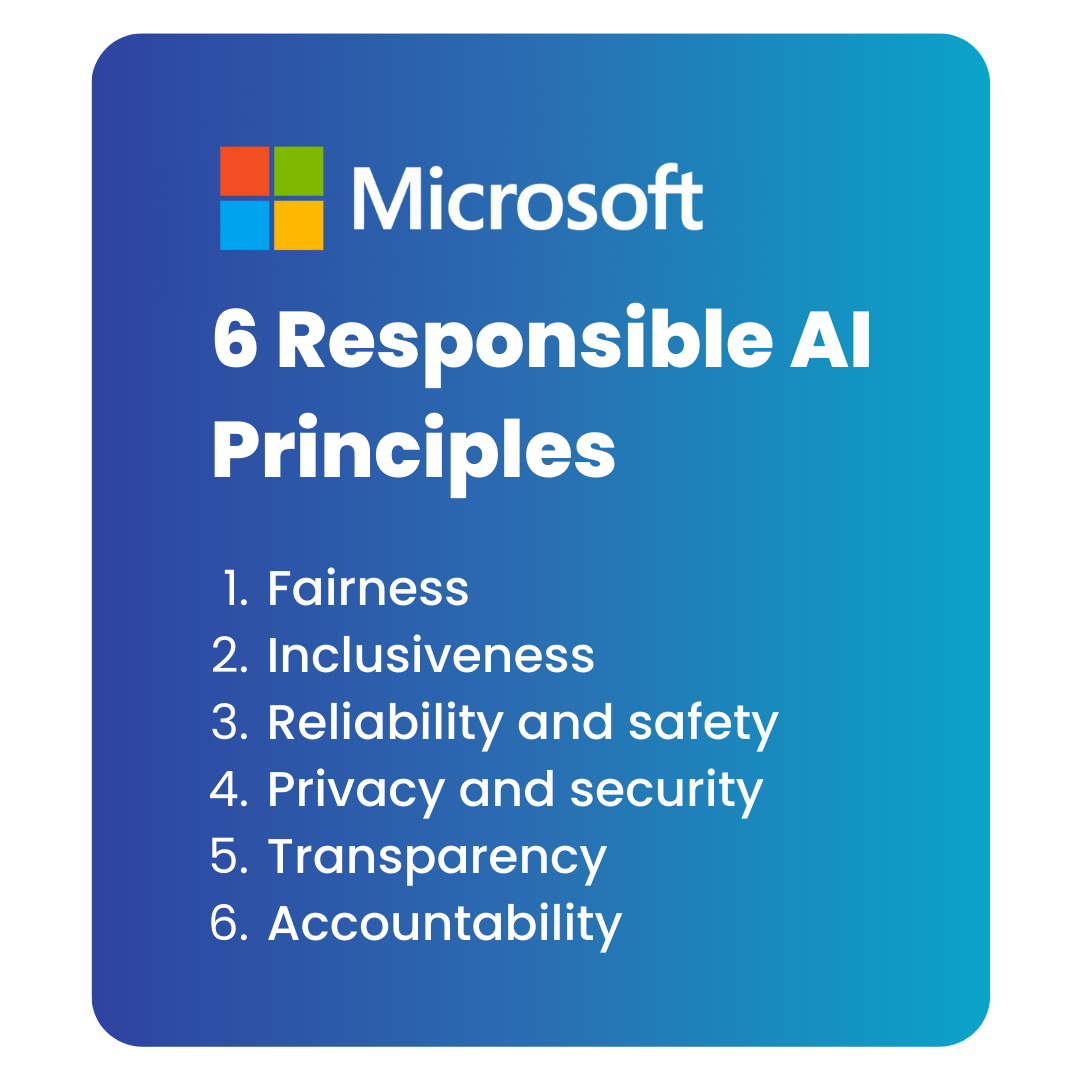

Microsoft has six principles to guide AI development and use that have set the industry standard for what responsible AI should be. Here is a breakdown of the principles and how Microsoft applies them by integrating a Responsible AI dashboard with Azure Machine Learning.

- Fairness: AI systems should treat all people fairly.

- Inclusiveness: AI systems should empower everyone and engage people.

Application to Azure ML: The fairness assessment component of Microsoft’s Responsible AI dashboard allows data scientists and developers to assess model fairness. This includes how the model’s predictions affect diverse groups of people.
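To make the idea of assessing fairness across groups concrete, here is a minimal sketch in plain Python. It is not the Responsible AI dashboard's implementation. It only illustrates one common check: comparing the share of positive predictions each group receives. The function names, the group labels, and the loan data are all hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rate (0 = equal)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: 1 = approved, 0 = declined.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates)  # approval rate per group
print(gap)    # a large gap is a signal to investigate, not proof of bias
```

A gap close to zero means the groups are approved at similar rates. Which fairness metric is appropriate depends on the use case, so treat this as one possible starting point.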

- Reliability and safety: AI systems should perform reliably and safely.

Application to Azure ML: The error analysis component of the Responsible AI dashboard helps you view and understand how errors are distributed across the dataset. Errors may be concentrated in one part of the data, for example when the model underperforms for a specific demographic.
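The idea behind looking at how errors are distributed can be sketched in a few lines of Python. This is an illustration under assumptions, not how the dashboard computes its results: the cohort names, labels, and predictions below are made up, and `error_rate_by_cohort` is a hypothetical helper.

```python
def error_rate_by_cohort(cohorts, y_true, y_pred):
    """Return the fraction of wrong predictions within each cohort."""
    counts = {}  # cohort -> (total examples, misclassified examples)
    for cohort, truth, pred in zip(cohorts, y_true, y_pred):
        total, errors = counts.get(cohort, (0, 0))
        counts[cohort] = (total + 1, errors + int(truth != pred))
    return {c: errors / total for c, (total, errors) in counts.items()}


# Hypothetical evaluation data, split by an age cohort.
cohorts = ["under_30"] * 4 + ["over_60"] * 4
y_true = [1, 0, 1, 0, 1, 0, 1, 1]
y_pred = [1, 0, 1, 1, 0, 1, 1, 0]

cohort_error_rates = error_rate_by_cohort(cohorts, y_true, y_pred)
worst_cohort = max(cohort_error_rates, key=cohort_error_rates.get)
print(cohort_error_rates)
print(worst_cohort)  # the cohort where the model underperforms most
```

An overall accuracy figure would hide the difference between the two cohorts here, which is why breaking errors down by group is useful.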

- Privacy and security: AI systems should be secure and respect privacy.

Application to Azure ML: AI systems must comply with privacy laws that require transparency about the collection, use, and storage of data and give consumers the option to choose how their data is used. Administrators can configure Azure Machine Learning to follow their company’s policies. This includes restricting access to resources or scanning for vulnerabilities.

- Transparency: AI systems should be understandable.

Application to Azure ML: The Responsible AI dashboard includes the following features that tackle transparency: model interpretability and counterfactual what-ifs.

Model interpretability helps you understand the model’s predictions and how they were made. For example, why was a customer’s loan application approved or rejected? Counterfactual what-ifs show what the model would predict if you changed the inputs. For example, you can check whether changing attributes like gender or ethnicity changes the model’s prediction, which would point to biased behavior.
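A counterfactual what-if check can be sketched as follows. This is a simplified illustration, not the dashboard's method: `toy_loan_model` is a hypothetical, deliberately biased stand-in for a trained model, and the applicant record and attribute values are invented for the example.

```python
def toy_loan_model(applicant):
    """Hypothetical stand-in for a trained model, deliberately biased."""
    score = applicant["income"] / 1000 - applicant["debt"] / 500
    if applicant["gender"] == "female":
        score -= 20  # unfair penalty that a what-if check should expose
    return "approved" if score >= 50 else "rejected"


def counterfactual_flips(model, applicant, attribute, alternatives):
    """Change one attribute at a time and report outcomes that differ."""
    baseline = model(applicant)
    flips = {}
    for value in alternatives:
        if value == applicant[attribute]:
            continue  # skip the applicant's actual value
        changed = {**applicant, attribute: value}  # copy with one change
        outcome = model(changed)
        if outcome != baseline:
            flips[value] = outcome
    return baseline, flips


applicant = {"income": 70000, "debt": 5000, "gender": "female"}
baseline, flips = counterfactual_flips(
    toy_loan_model, applicant, "gender", ["female", "male"]
)
print(baseline)  # outcome for the real applicant
print(flips)     # outcomes that change when only gender changes
```

If changing only a sensitive attribute flips the decision, the model is using that attribute (or a proxy for it) in a way that deserves a closer look.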

- Accountability: People should be accountable for AI systems.

Application to Azure ML: Azure Machine Learning provides machine learning operations (MLOps) capabilities to increase accountability. These capabilities include notifications and alerts on events in the machine learning life cycle, such as experiment completion, model registration, and model deployment, as well as monitoring for operational issues.

How to create an ethical culture

The building blocks of enacting responsible AI start with the following:

- Governance and rules: Set up a Responsible AI mission and guiding principles to provide clear expectations across the organization in the use and creation of AI technology.

- Training and practices: Educate your employees on the principles and structures put in place to ensure compliance with the rules.

- Tools and processes: Develop tools and techniques and embed them into your AI systems to support responsible AI values, drawing inspiration from industry leaders like Microsoft. Put processes in place to monitor your AI systems, and to track data and privacy laws and regulations, to ensure compliance.

Developing a productive AI ecosystem

In the fast-growing world of artificial intelligence, responsible practices are not just a choice; they are imperative. By prioritizing fairness, transparency, and ethical compliance, organizations can build AI systems that benefit humanity while minimizing harm. Use responsible AI principles as a compass to guide your ongoing and future AI efforts to create technology that serves all, leaving no one behind.
