Author

Carolyn Gjerde

The Copilot Success Series

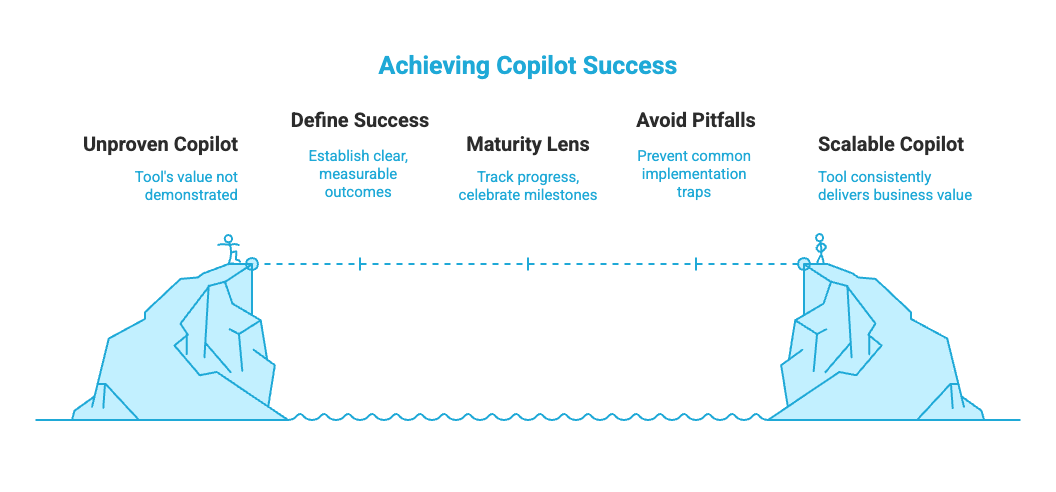

This article is the third in our four-part series on making Microsoft 365 Copilot succeed. In Blog 1, we explored why most AI pilots fail. In Blog 2, we introduced the playbook of alignment, trust, and adoption. Today, we focus on the question leaders ask most: what does Copilot success actually look like, and how will we know when we've achieved it?

Success, Defined (Without the Hype)

New features make headlines. Results keep budgets. Success with Copilot isn't about novelty; it's about outcomes employees feel, leaders can measure, and the business can scale.

Think of success through a few practical lenses:

- Productivity you can clock.

Examples: 25–30% reduction in meeting-recap time; drafting client emails/proposals in half the time; 10+ hours/month saved per knowledge worker.

- A better employee day.

Indicators: fewer repetitive tasks, faster "first drafts," less context-switching, higher satisfaction in pulse surveys.

- ROI with credibility.

Tie time saved to cost curves or revenue capacity (e.g., more proposals per quarter, faster sales cycles, higher billable utilization).

- Embedded in the workflow.

Evidence: Copilot prompts/checklists in standard operating procedures, playbooks in Teams/SharePoint, usage concentrated in target scenarios, not just sporadic clicks.

- Trusted and compliant by design.

Signals: clear policies people understand, reduced "shadow AI," fewer policy exceptions, auditability through Purview.

These lenses help you describe success in business terms so you can fund it, protect it, and grow it.
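To make the "ROI with credibility" lens concrete, here is a minimal sketch of the arithmetic in Python. Every input is an illustrative assumption rather than a benchmark: the headcount, the loaded hourly cost, the per-user monthly cost, and especially the `realization_rate` (the share of saved hours that actually turns into cost or revenue value) are placeholders to replace with your own figures. Only the 10 hours/month figure echoes the example above.

```python
from dataclasses import dataclass


@dataclass
class TimeSavingsEstimate:
    """Rough monthly ROI for one Copilot scenario (all inputs are assumptions)."""

    workers: int                    # knowledge workers in the target scenario
    hours_saved_per_month: float    # per worker, ideally measured in a pilot
    loaded_hourly_cost: float       # hypothetical fully loaded cost per hour
    monthly_cost_per_worker: float  # hypothetical licence + enablement cost
    realization_rate: float = 1.0   # share of saved time that becomes real value

    def monthly_value(self) -> float:
        # Value = hours saved x cost per hour, discounted by what is realized.
        gross = self.workers * self.hours_saved_per_month * self.loaded_hourly_cost
        return gross * self.realization_rate

    def monthly_cost(self) -> float:
        return self.workers * self.monthly_cost_per_worker

    def roi(self) -> float:
        # ROI as a ratio: (value - cost) / cost.
        cost = self.monthly_cost()
        if cost <= 0:
            raise ValueError("monthly cost must be positive to compute ROI")
        return (self.monthly_value() - cost) / cost


# Placeholder numbers for illustration only.
estimate = TimeSavingsEstimate(
    workers=200,
    hours_saved_per_month=10,
    loaded_hourly_cost=60.0,
    monthly_cost_per_worker=30.0,
    realization_rate=0.25,
)

print(f"Monthly value: {estimate.monthly_value():,.0f}")
print(f"Monthly cost:  {estimate.monthly_cost():,.0f}")
print(f"ROI ratio:     {estimate.roi():.1f}")
```

Keeping the realization rate as an explicit input is a deliberate choice: it lets finance challenge one visible assumption instead of discounting the whole estimate.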

The Maturity Lens: Progress Over Perfection

There isn't one "right" end state. Success looks different depending on where you are today. Use a simple maturity lens to set expectations and celebrate real progress:

- Emerging: Pilots prove viability in 1–2 priority scenarios. Early wins are documented and shared. Guardrails are drafted.

- Developing: Governance is live; champions and prompt literacy programs are running. Metrics start to trend (time saved, adoption in target workflows).

- Scaling: Copilot is standard in core processes. Outcomes are reviewed quarterly. Playbooks expand to new functions with predictable results.

The goal isn't to "be perfect." It's to move deliberately from promising to repeatable.

Common Pitfalls (and How to Avoid Them)

Even strong efforts can stall. Watch for these traps:

- Vanity metrics. Counting raw usage instead of measuring outcomes in target workflows.

Fix: Define success metrics up front (time saved, quality, cycle time) and report them by scenario.

- Feature tours without workflow changes. Training people "about Copilot," but not how it fits into their day.

Fix: Publish role-based playbooks with prompts and before/after examples.

- Governance afterthoughts. Unclear data boundaries create hesitation—or risky behavior.

Fix: Make policies visible, simple, and scenario-specific; brief managers to reinforce them.

- Scattered pilots. Too many experiments, no center of gravity.

Fix: Prioritize 2–3 business-critical scenarios per quarter and go deep.

- Success not socialized. Wins stay local; momentum fizzles.

Fix: Share short, quantified stories (who/what/result) in leadership and all-hands forums.

How Leaders Turn “Good” Into “Scalable”

Technology enables; leadership scales. Executives accelerate impact when they:

- Name the few outcomes that matter (and stop the rest from diluting focus).

- Back governance early, visibly, and simply so people feel confident using Copilot.

- Fund champions and prompt literacy as core enablers, not nice-to-haves.

- Ask for outcome stories monthly, and celebrate the teams creating them.

- Keep the flywheel turning by expanding to the next scenarios once results are proven.

When leaders do this, Copilot moves from experiment to operating model.

Measuring What Matters (Fast, Fair, Repeatable)

A lightweight scorecard helps you track progress without creating reporting overhead:

- Leading indicators:

  - % of target teams using Copilot in the defined scenarios

  - % of champion-led sessions run; # of prompts/playbooks adopted

  - % of employees trained in prompt literacy

- Outcome indicators:

  - Time saved per scenario (e.g., meeting recaps, proposal drafts, research)

  - Cycle-time reduction (e.g., from request to draft; from draft to approval)

  - Quality proxies (e.g., fewer revisions, higher CSAT on deliverables)

- Trust indicators:

  - Policy acknowledgment rates; flagged exceptions resolved

  - Reduction in “shadow AI” use; Purview insights trending stable

Keep the scorecard small, publish it widely, and make it part of business reviews, not a side project.
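As one way to keep the scorecard lightweight, the sketch below computes a single outcome indicator (time saved per scenario) and a single leading indicator (share of target teams active) from a handful of before/after observations. The record shape, field names, and sample values are hypothetical; in practice the inputs might come from pulse surveys or timing samples, and nothing here relies on a Copilot or Purview API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ScenarioObservation:
    """One timed task, before and after Copilot (hypothetical record shape)."""

    scenario: str
    minutes_before: float
    minutes_after: float


def time_saved_by_scenario(observations):
    """Average minutes saved and percent reduction, reported per scenario."""
    grouped = {}
    for obs in observations:
        grouped.setdefault(obs.scenario, []).append(obs)

    report = {}
    for scenario, rows in grouped.items():
        before = mean(r.minutes_before for r in rows)
        after = mean(r.minutes_after for r in rows)
        saved = before - after
        report[scenario] = {
            "avg_minutes_saved": saved,
            "pct_reduction": 100 * saved / before if before else 0.0,
        }
    return report


def adoption_rate(active_target_teams: int, total_target_teams: int) -> float:
    """Leading indicator: percent of target teams using the defined scenarios."""
    if total_target_teams <= 0:
        raise ValueError("total_target_teams must be positive")
    return 100 * active_target_teams / total_target_teams


# Made-up sample data for illustration only.
sample = [
    ScenarioObservation("meeting recap", 20, 15),
    ScenarioObservation("meeting recap", 20, 14),
    ScenarioObservation("proposal draft", 120, 60),
]

report = time_saved_by_scenario(sample)
for scenario, stats in report.items():
    print(f"{scenario}: {stats['avg_minutes_saved']:.1f} min saved "
          f"({stats['pct_reduction']:.1f}% reduction)")
print(f"Adoption: {adoption_rate(6, 8):.0f}% of target teams")
```

Reporting by scenario rather than as one blended average keeps the numbers tied to the target workflows, which is what separates an outcome metric from a raw usage count.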

The Bottom Line

Copilot success isn’t about proving the tool works. It’s about proving, consistently, that it matters: to productivity, to employees, and to the business. Define success through clear lenses, pace it with a maturity mindset, avoid the common traps, and lead with outcomes.