Building a Festive Future with Responsible AI

Artificial Intelligence (AI) has emerged as a transformative force, promising groundbreaking advancements across industries. While AI has the potential to bring significant positive change, it has also raised concerns regarding ethics, accountability, and fairness. To navigate this delicate balance, we must develop AI that aligns with responsible principles, ensuring it benefits humanity without any naughty side effects.

How do we develop responsible AI?

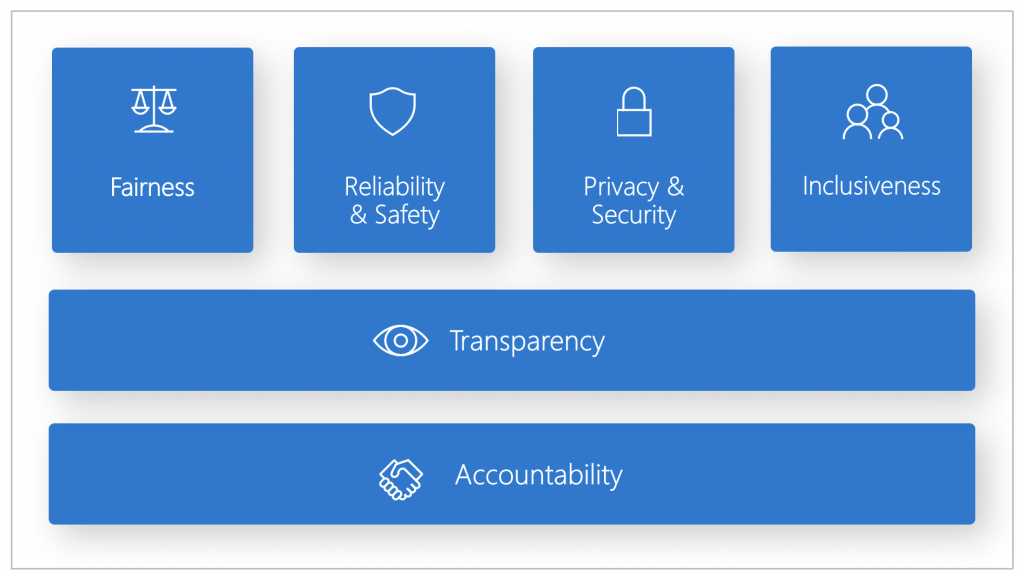

As a pioneer in developing AI solutions, Microsoft has laid out six fundamental principles for the development and use of responsible AI. These principles serve as a north star for creators and organizations committed to developing ethical AI:

- Fairness: AI systems should treat all people fairly, without bias or discrimination.

- Reliability & safety: AI systems should be tested to ensure they perform reliably and safely to avoid unintended harm.

- Privacy & security: AI systems should prioritize data security and respect user privacy.

- Inclusiveness: AI systems should empower and engage all individuals, promoting accessibility.

- Transparency: AI systems should be transparent and understandable to users.

- Accountability: Individuals should be held accountable for the outcomes of AI systems.

In the realm of artificial intelligence, inclusiveness is not just a feature; it’s a fundamental principle. The power of AI should be harnessed to empower and engage every individual, breaking down barriers and promoting accessibility for a truly inclusive future.

– Cristall Amurao, Business Applications Practice Lead of Creospark

But what does it mean to approach AI development responsibly?

Innovating responsibly

Responsible AI development starts with a people-centered mindset. While AI systems offer the potential for broad fairness and inclusivity, they also pose the risk of perpetuating biases. Achieving fairness is challenging due to data biases, the diversity of situations and cultures, and the absence of universal fairness definitions. That’s why it’s more crucial than ever to embrace diverse perspectives, foster continuous learning, and maintain agility in AI research and development. Just as Santa’s sleigh adapts to different chimneys, technology must remain adaptable and receptive to feedback to align with ethical principles.
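To make the point about the absence of a universal fairness definition concrete, here is a minimal sketch in Python. It is an illustration only, not a method from Microsoft or Creospark: the function names, the two groups, and the toy data are all hypothetical. It compares two commonly used fairness measures, demographic parity (do groups receive positive predictions at the same rate?) and equal opportunity (do qualified people in each group receive positive predictions at the same rate?), on the same set of predictions.

```python
from collections import defaultdict


def demographic_parity_gap(groups, preds):
    """Largest difference in positive-prediction rate between any two groups."""
    by_group = defaultdict(list)
    for group, pred in zip(groups, preds):
        by_group[group].append(pred)
    rates = [sum(values) / len(values) for values in by_group.values()]
    return max(rates) - min(rates)


def equal_opportunity_gap(groups, labels, preds):
    """Largest difference in true-positive rate between any two groups.

    Only people whose true label is 1 are counted. A group with no
    positive labels is left out of the comparison.
    """
    by_group = defaultdict(list)
    for group, label, pred in zip(groups, labels, preds):
        if label == 1:
            by_group[group].append(pred)
    rates = [sum(values) / len(values) for values in by_group.values()]
    return max(rates) - min(rates)


# Hypothetical toy data: four people in group "A", four in group "B".
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 0, 0, 1, 1, 1, 1]  # 1 = actually qualified
preds = [1, 1, 0, 0, 1, 1, 0, 0]   # 1 = model says "approve"

print("Demographic parity gap:", demographic_parity_gap(groups, preds))
print("Equal opportunity gap:", equal_opportunity_gap(groups, labels, preds))
```

In this toy example both groups are approved at the same rate, so the demographic parity gap is 0.0. Yet every qualified person in group A is approved while only half of the qualified people in group B are, so the equal opportunity gap is 0.5. The same model looks fair under one definition and unfair under another, which is why the choice of definition needs diverse perspectives and ongoing review.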

Empowering others

Responsible AI development extends beyond the individual creator. It involves empowering organizations to cultivate a culture that prioritizes responsible AI. These principles should be built in from the ground up through multidisciplinary research, shared learning, and innovation in tools and technologies. Think of it as decorating a tree with various ornaments, each playing a crucial role in creating a festive atmosphere.

Fostering positive impact

Responsible AI developers should commit to ensuring that AI technology creates a lasting, positive impact for everyone. This means actively participating in shaping public policy, contributing to industry working groups, and supporting initiatives that address society’s most significant challenges.

Responsible AI development is more than a set of guidelines; it’s a collective commitment to ensuring that technology serves the best interests of humanity. As future creators of technology, it is our responsibility to uphold these principles and guide the development of AI in a manner that fosters trust, encourages innovation, and contributes to the betterment of society.
